When you’re deciding what infrastructure to deploy and which cloud to use, nine times out of ten you’re going to go the Infrastructure-as-Code route. Not only does it make deployments repeatable, but it also lets you code the entire configuration and store it in one location so the rest of your team can use it.

In this blog post, you’ll learn how CloudTruth makes it easier to deploy Kubernetes with Terraform in an AWS environment, using an Amazon EKS cluster with a Fargate profile.

Prerequisites

To follow along with this blog post, you’ll need:

- An AWS account. If you don’t have one, you can sign up for a free trial here.

- The AWS CLI configured with the aws configure command. You can find a tutorial on how to do that here.

- Terraform installed, which you can find here.

- A code editor or IDE, like VS Code

- A free CloudTruth account, which you can sign up for here.

- The CloudTruth CLI, which you can download here.

Thinking About Reusable Code to Deploy Kubernetes with Terraform

Regardless of where an environment lives, you need a way to build repeatable processes. Not just some automation code to make your life easier, but true repeatability: code that multiple teams can run over and over again for years to come. From a thought-process and architecture perspective, it’s pretty much the equivalent of building a software solution, except it’s infrastructure code.

Variables and parameters got us halfway there with infrastructure code, but the problem is you still have to manage them in source control and constantly change them depending on the environment (dev, staging, prod, etc.).
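For example, with plain Terraform you might keep one .tfvars file per environment, using the same subnet variables this post declares later, and pick one at run time with terraform plan -var-file=development.tfvars. The file name and subnet IDs below are hypothetical placeholders:

```hcl
# development.tfvars (hypothetical file; the subnet IDs are placeholders)
subnet_id_1         = "subnet-0123456789abcdef0"
subnet_id_2         = "subnet-0123456789abcdef1"
private_subnet_id_1 = "subnet-0123456789abcdef2"

# A production.tfvars file would repeat the same three keys with production
# subnet IDs, and both files have to be committed and kept in sync.
```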

CloudTruth gets you the rest of the way there with a true, centralized solution that you can pull any parameter, secret, and variable from. Instead of maintaining a separate set of variable files for every environment, you can have one configuration that can be used across any environment. This approach is the best way to deploy Kubernetes with Terraform in the noble pursuit of more perfect deploys.

Configuring CloudTruth

Prior to running the Terraform code, you must ensure that the project in CloudTruth is configured. Getting the project along with Terraform variables up and running takes a few minutes.

The Project

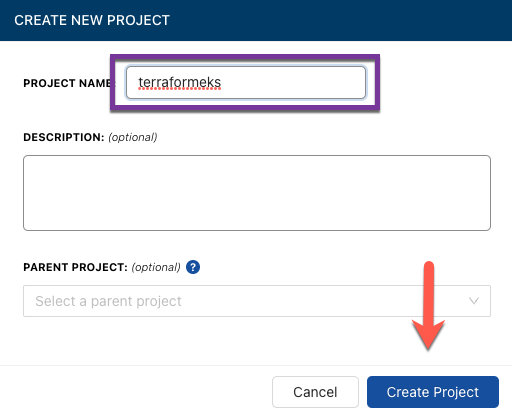

Click Project → Add Project.

Give your project a name (the commands in this post use terraformeks) and then click the blue Create Project button.

The Parameters

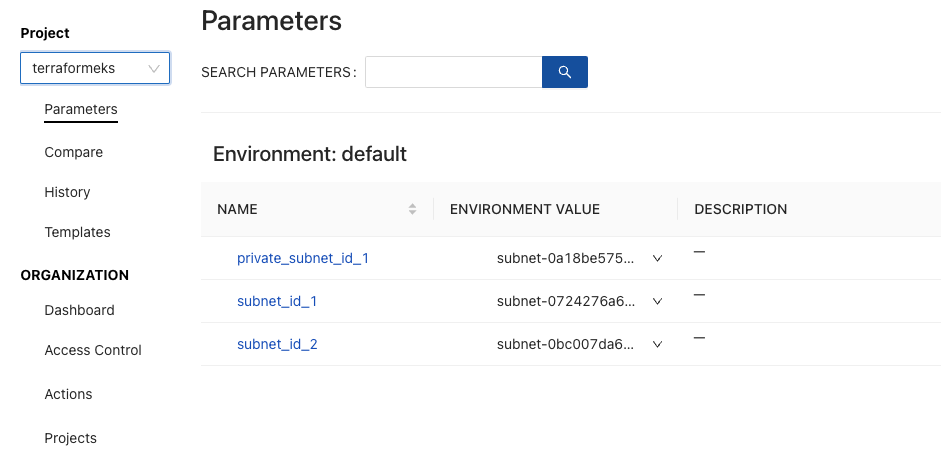

Inside the CloudTruth project, you need the values for the Terraform variables to be available as parameters. This EKS configuration uses three subnets from your AWS account: two for the EKS cluster itself and one private subnet for the Fargate profile.

Run the following commands and replace the values with the proper subnet IDs from your AWS account:

```shell
cloudtruth --project terraformeks parameter set --value subnet-your_subnet_id_1 subnet_id_1
cloudtruth --project terraformeks parameter set --value subnet-your_subnet_id_2 subnet_id_2
cloudtruth --project terraformeks parameter set --value subnet-your_subnet_id_3 private_subnet_id_1
```

After running the commands, you’ll be able to see the parameters inside of CloudTruth.
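When choosing the three IDs, keep two AWS requirements in mind: an EKS cluster needs subnets in at least two different Availability Zones, and Fargate only launches pods into private subnets. Once main.tf and variables.tf from the next section exist, you could optionally have Terraform flag the first requirement with a sketch like this one. The data source and check names are hypothetical, and check blocks need Terraform 1.5 or later:

```hcl
# Hypothetical addition to main.tf.
# Look up the two cluster subnets passed in through the variables.
data "aws_subnet" "cluster_1" {
  id = var.subnet_id_1
}

data "aws_subnet" "cluster_2" {
  id = var.subnet_id_2
}

# Warn during plan/apply if both cluster subnets sit in the same
# Availability Zone; a failed check is reported but does not stop the run.
check "cluster_subnets_in_two_azs" {
  assert {
    condition     = data.aws_subnet.cluster_1.availability_zone != data.aws_subnet.cluster_2.availability_zone
    error_message = "The subnet_id_1 and subnet_id_2 values should be in different Availability Zones."
  }
}
```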

The Terraform Code

Now that your CloudTruth project and parameters are configured, it’s time to prepare the code for deployment. There will be two Terraform files:

- main.tf

- variables.tf

Because you’re using CloudTruth, you don’t have to worry about a .tfvars file as CloudTruth is handling all of the Terraform variables for you.
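Neither file configures an AWS region, so the AWS provider falls back to its usual sources, such as the AWS_REGION environment variable or the region you set with aws configure. If you’d rather make the region explicit, here is a minimal sketch of what you could add to main.tf. The aws_region variable and its us-east-1 default are assumptions, and its value could live in CloudTruth next to the subnet IDs:

```hcl
# Hypothetical addition to main.tf: set the AWS region explicitly.
# The default is a placeholder and must match the region your subnets are in.
variable "aws_region" {
  type    = string
  default = "us-east-1"
}

provider "aws" {
  region = var.aws_region
}
```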

The main.tf

Let’s break the code down into bits to understand what’s happening at each step.

First, you’ll have the provider information for AWS.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}
```

Next, you’ll have your first resource, which creates the IAM role EKS needs to run and attaches the AmazonEKSClusterPolicy managed policy to it. You only need that policy, and no EC2 worker node policies, because this EKS cluster will use a Fargate profile, so no EC2 instances are needed as worker nodes.

```hcl
resource "aws_iam_role" "eks-iam-role" {
  name = "cloudtruth-eks-iam-role"
  path = "/"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "eks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

## Attach the IAM policy to the IAM role
resource "aws_iam_role_policy_attachment" "AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.eks-iam-role.name
}
```

Next, the resource for the EKS cluster itself.

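```hcl
resource "aws_eks_cluster" "cloudtruth-eks" {
  name     = "cloudtruth-cluster"
  role_arn = aws_iam_role.eks-iam-role.arn

  vpc_config {
    subnet_ids = [var.subnet_id_1, var.subnet_id_2]
  }

  # Depend on the policy attachment, not just the role, so the cluster's
  # permissions exist before it is created and are removed only after it is deleted.
  depends_on = [
    aws_iam_role_policy_attachment.AmazonEKSClusterPolicy,
  ]
}
```

Because the EKS cluster will use a Fargate profile, you also need an IAM role for Fargate, known as the pod execution role, along with policy attachments for it.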

```hcl
resource "aws_iam_role" "eks-fargate" {
  name = "eks-fargate-cloudtruth"

  assume_role_policy = jsonencode({
    Statement = [{
      Action = "sts:AssumeRole"
      Effect = "Allow"
      Principal = {
        Service = "eks-fargate-pods.amazonaws.com"
      }
    }]
    Version = "2012-10-17"
  })
}

# Lets Fargate pull container images from Amazon ECR and run the pods.
resource "aws_iam_role_policy_attachment" "AmazonEKSFargatePodExecutionRolePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSFargatePodExecutionRolePolicy"
  role       = aws_iam_role.eks-fargate.name
}

# Not strictly required: the pod execution role generally only needs the policy above.
resource "aws_iam_role_policy_attachment" "AmazonEKSClusterPolicy-fargate" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.eks-fargate.name
}
```

The last bit of code creates the Fargate profile itself.

```hcl
resource "aws_eks_fargate_profile" "cloudtruth-eks-serverless" {
  cluster_name           = aws_eks_cluster.cloudtruth-eks.name
  fargate_profile_name   = "cloudtruth-serverless-eks"
  pod_execution_role_arn = aws_iam_role.eks-fargate.arn
  subnet_ids             = [var.private_subnet_id_1]

  # Pods created in the default namespace are scheduled onto Fargate.
  selector {
    namespace = "default"
  }
}
```

When all of the code is put together in the main.tf file, it should look like this:

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

# IAM Role for EKS to have access to the appropriate resources
resource "aws_iam_role" "eks-iam-role" {
  name = "cloudtruth-eks-iam-role"
  path = "/"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "eks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

## Attach the IAM policy to the IAM role
resource "aws_iam_role_policy_attachment" "AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.eks-iam-role.name
}

## Create the EKS cluster
resource "aws_eks_cluster" "cloudtruth-eks" {
  name     = "cloudtruth-cluster"
  role_arn = aws_iam_role.eks-iam-role.arn

  vpc_config {
    subnet_ids = [var.subnet_id_1, var.subnet_id_2]
  }

  # Wait for the policy attachment so the role's permissions outlive the cluster.
  depends_on = [
    aws_iam_role_policy_attachment.AmazonEKSClusterPolicy,
  ]
}

## IAM role for Fargate pods (the pod execution role)
resource "aws_iam_role" "eks-fargate" {
  name = "eks-fargate-cloudtruth"

  assume_role_policy = jsonencode({
    Statement = [{
      Action = "sts:AssumeRole"
      Effect = "Allow"
      Principal = {
        Service = "eks-fargate-pods.amazonaws.com"
      }
    }]
    Version = "2012-10-17"
  })
}

resource "aws_iam_role_policy_attachment" "AmazonEKSFargatePodExecutionRolePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSFargatePodExecutionRolePolicy"
  role       = aws_iam_role.eks-fargate.name
}

resource "aws_iam_role_policy_attachment" "AmazonEKSClusterPolicy-fargate" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.eks-fargate.name
}

## Create the Fargate profile
resource "aws_eks_fargate_profile" "cloudtruth-eks-serverless" {
  cluster_name           = aws_eks_cluster.cloudtruth-eks.name
  fargate_profile_name   = "cloudtruth-serverless-eks"
  pod_execution_role_arn = aws_iam_role.eks-fargate.arn
  subnet_ids             = [var.private_subnet_id_1]

  selector {
    namespace = "default"
  }
}
```

The variables.tf

Throughout the main.tf configuration above, you saw a few variables for the subnets. Those are the variables you need to declare in a variables.tf file.

The file should contain the following code:

```hcl
variable "subnet_id_1" {
  type = string
}

variable "subnet_id_2" {
  type = string
}

variable "private_subnet_id_1" {
  type = string
}
```

Because CloudTruth is handling the values for the three variables, you don’t have to set defaults or pass them in at runtime with a .tfvars file.
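Terraform reads a declared variable from an environment variable named TF_VAR_ followed by the variable name (for example, TF_VAR_subnet_id_1). If terraform plan prompts you for these values, compare that naming with the environment variables cloudtruth run actually sets for your parameters. As an optional hardening step, here is a sketch of the same variables.tf with descriptions and a simple format check; the regular expression is an assumption about what a subnet ID looks like, not a rule from CloudTruth or AWS:

```hcl
# variables.tf (sketch): the same three variables, plus descriptions and a
# basic "subnet-<hex>" format check on each value.
variable "subnet_id_1" {
  type        = string
  description = "First subnet ID for the EKS cluster."

  validation {
    condition     = can(regex("^subnet-[0-9a-f]+$", var.subnet_id_1))
    error_message = "The subnet_id_1 value must look like subnet-0123456789abcdef0."
  }
}

variable "subnet_id_2" {
  type        = string
  description = "Second subnet ID for the EKS cluster."

  validation {
    condition     = can(regex("^subnet-[0-9a-f]+$", var.subnet_id_2))
    error_message = "The subnet_id_2 value must look like subnet-0123456789abcdef0."
  }
}

variable "private_subnet_id_1" {
  type        = string
  description = "Private subnet ID for the Fargate profile."

  validation {
    condition     = can(regex("^subnet-[0-9a-f]+$", var.private_subnet_id_1))
    error_message = "The private_subnet_id_1 value must look like subnet-0123456789abcdef0."
  }
}
```

With this in place, a mistyped value stored in CloudTruth fails at terraform plan with the matching error message instead of surfacing later as an AWS API error.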

Running The Code

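Once main.tf and variables.tf are created, it’s time to run the configuration. To run the Terraform code, you’ll use the CloudTruth CLI. The Terraform commands are the same as usual; they’re just passed to cloudtruth run after the -- separator, so they run with the project’s parameters for the chosen environment (development here) available to them.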

```shell
cloudtruth --project "terraformeks" --env development run -- terraform init
cloudtruth --project "terraformeks" --env development run -- terraform plan
cloudtruth --project "terraformeks" --env development run -- terraform apply -auto-approve
```

When you run the commands, you’ll notice that the output is no different from standard Terraform output.
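Because it is standard Terraform output, you can add outputs of your own. For example, a hypothetical outputs.tf like the sketch below would print the cluster name, API endpoint, and Fargate profile status at the end of the apply; the file and output names are illustrative:

```hcl
# outputs.tf (hypothetical file): print a few attributes after apply.
output "cluster_name" {
  value = aws_eks_cluster.cloudtruth-eks.name
}

output "cluster_endpoint" {
  value = aws_eks_cluster.cloudtruth-eks.endpoint
}

output "fargate_profile_status" {
  value = aws_eks_fargate_profile.cloudtruth-eks-serverless.status
}
```

With the cluster name in hand, aws eks update-kubeconfig --name cloudtruth-cluster adds the cluster to your kubeconfig so you can reach it with kubectl.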

Wrapping Up

Managing variables, secrets, parameters, and Terraform configurations isn’t difficult, but it is cumbersome. There’s a lot to keep track of: where the configs are stored, when they need to change, and who has access to them.

With CloudTruth, all of that is abstracted and handled for you.

If you’d like to get started with CloudTruth today, you can try it for free at the link here.
